The Tower of Babble

Some musings on ChatGPT, the miraculous AI text generator

On November 30th, the machine learning company OpenAI unleashed a chatbot service called ChatGPT on an unsuspecting world. ChatGPT can produce impressive responses to user ‘prompts’.

Let’s ask it how it works. In the excerpts, the text in boldface is my prompt, and the rest is the reply from ChatGPT.

**Explain in a list how chatbots based on large language models work.**

A chatbot based on a large language model is a type of artificial intelligence (AI) that uses a natural language processing (NLP) system to generate responses to user input.

The chatbot's responses are generated using a pre-trained language model, which is a machine learning algorithm that has been trained on a large dataset of text. This allows the chatbot to understand the context and meaning of the user's input.

When a user types a message to the chatbot, the message is first passed through the pre-trained language model. This allows the chatbot to understand the meaning and intent of the user's message.

The chatbot then uses its knowledge and understanding of the user's input to generate a response. This response is typically in the form of a natural-sounding text message, but it could also be in the form of an image, a video, or some other form of media.

The chatbot's response is then sent back to the user, completing the conversation. This process can be repeated indefinitely, allowing the chatbot to engage in conversations with multiple users at the same time.

As you can see, the response is relevant and coherent. Non-experts will of course wonder whether it is correct. I am not an expert on machine learning but I know enough to recognize that this is a perfectly adequate explanation. I would not have been able to tell if it was written by a human or not. What is debatable is whether terms like “understand” and “knowledge” are appropriate for describing a glorified statistical model. We’ll get to this debate in a moment, and why I think there are more interesting conversations to be had.

ChatGPT is a way for users to interact with GPT-3.5, the latest version of OpenAI’s Generative Pre-trained Transformer. It’s a type of artificial neural network trained on vast quantities of text. Its job during training is to predict the next word, given its history of ‘experience’ with text. The way it retains a trace of this history is by using prediction errors to tweak its billions of connection weights (which are loosely inspired by the synapses linking real neurons in brains).
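To make the "predict the next word, then nudge the weights by the prediction error" idea concrete, here is a toy sketch. It is emphatically not how GPT is built: it is a tiny table of weights that only looks at the previous word, whereas GPT is a transformer with billions of weights and a long context. The function names, the corpus, and the learning rate are all my own illustrative assumptions.

```python
import math
import random


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_next_word_model(text, epochs=200, lr=0.5, seed=0):
    """Learn weights[prev][next]: a score for how strongly `next` follows `prev`.

    Hypothetical toy model: one weight per (previous word, next word) pair.
    """
    words = text.split()
    vocab = sorted(set(words))
    index = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(n)] for _ in range(n)]
    pairs = [(index[a], index[b]) for a, b in zip(words, words[1:])]

    for _ in range(epochs):
        for prev, actual_next in pairs:
            probs = softmax(weights[prev])
            for j in range(n):
                target = 1.0 if j == actual_next else 0.0
                # Prediction error: what the model guessed minus what happened.
                error = probs[j] - target
                # Tweak the weight a little in the direction that shrinks the error.
                weights[prev][j] -= lr * error
    return vocab, index, weights


def predict_next(word, vocab, index, weights):
    """Return the word the model considers most likely to come next."""
    probs = softmax(weights[index[word]])
    best = max(range(len(vocab)), key=lambda j: probs[j])
    return vocab[best]


corpus = "hide and seek is a game . hide and seek is fun ."
vocab, index, weights = train_next_word_model(corpus)
print(predict_next("hide", vocab, index, weights))
```

After training on this two-sentence corpus, the model should favor "and" after "hide", simply because that is the only continuation it has ever seen. Everything it 'knows' lives in the weight table, which is the small-scale analogue of the trace of history described above.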

Is it impressive? Yes.

ChatGPT represents a qualitative leap forward for large language models (LLMs). To appreciate this, it helps to see how a previous iteration of GPT did. When GPT-2 came out, my friends and I were obsessed with it — largely because of how bizarre the outputs were1. It produced grammatical sentences with little coherence or structure. Even with scare quotes, no one would have used “understanding” to describe what it did.

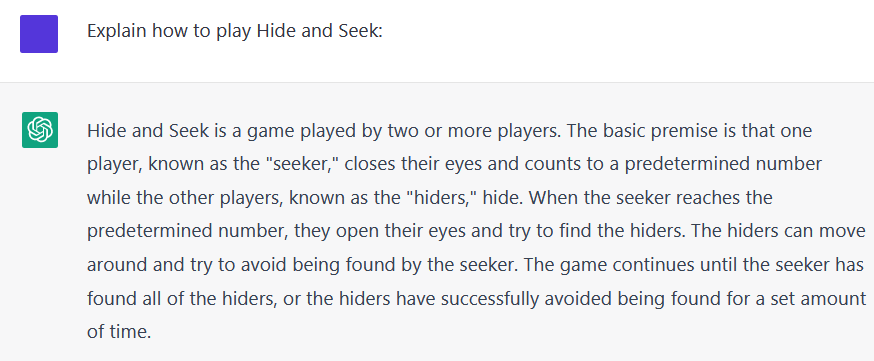

Here’s GPT-2 attempting to explain how Hide and Seek works:

ChatGPT had no trouble:

ChatGPT is not just spitting out remembered text, however. It can generate sentences that have never been written before (as far as I can tell from Google string searches). It also maintains very impressive long-range order: it can write stories that are quite coherent, even though they tend to be limp and clichéd.

Amazingly, you can also get it to write explanations of technical concepts… in verse. The rhymes are often quite bad, and the scansion is terrible, but the GPT doggerel is still startlingly impressive. Here is one explaining the backpropagation algorithm, which is used to train artificial neural networks such as GPT itself:

And here is an explanation of Newton’s Law of Gravitation in the style of a pirate.

How about that joke at the end? Definitely not just a copy-paste from the internet.

Is it dangerous? Yes.

A lot of commentary about ChatGPT centers around the various ways it can be harmful. It is capable of producing accurate summaries of scientific concepts, but it can also drift into nonsense, and even make up nonexistent citations. Anyone using a model like this has to be extra vigilant to check facts and watch out for logical or ethical lapses. Vigilance is perennially in low supply, so I don’t blame people for thinking of this as another portent of the impending epistemic apocalypse. But ChatGPT can crank out acceptable essays on almost any mainstream topic, so teachers are worried that students will use it to cheat on homework2.

Also, despite some improvements in safety filtering, it is still easy to get it to generate text that is racist, sexist or bigoted towards marginalized people. So if — or more likely, when — such services become widely available, people will suddenly be able to produce textual horrors at industrial scale: harassment, spam, fake news, and propaganda. In quantity, such text can even alter search engine results, so Google (whose results have been declining in quality anyhow) will have its work cut out for it. And it’s important to note that many of the people who report problems with LLMs are unpaid volunteers3.

GPT can also generate computer code, which means it could serve as a tool for software engineers. Or, more worryingly, it could serve as a replacement for software engineers. Here I asked ChatGPT to generate Python code that produces a surface plot. Note the explanation of the code. Clearly this kind of technology has the potential to be used to teach people how to code.

I ran it in Google Colaboratory and it worked without needing any corrections:
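For readers who have not seen this kind of script, here is a rough stand-in. It is my own minimal sketch, not the code ChatGPT produced, and the plotted function z = sin(√(x² + y²)), the function names, and the grid size are all assumptions chosen for illustration. The plotting function assumes NumPy and Matplotlib are installed, as they are by default in Colab.

```python
import math


def surface_grid(n=50, lo=-5.0, hi=5.0):
    """Build X, Y, Z grids (lists of rows) for z = sin(sqrt(x^2 + y^2)).

    Hypothetical helper: n points per axis, evenly spaced from lo to hi.
    """
    step = (hi - lo) / (n - 1)
    axis = [lo + i * step for i in range(n)]
    X = [[x for x in axis] for _ in axis]   # x varies along each row
    Y = [[y for _ in axis] for y in axis]   # y varies from row to row
    Z = [[math.sin(math.hypot(x, y)) for x in axis] for y in axis]
    return X, Y, Z


def plot_surface(X, Y, Z):
    """Draw the grids as a 3D surface plot."""
    # Imported here so that surface_grid works even without these libraries.
    import numpy as np
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(projection="3d")
    ax.plot_surface(np.array(X), np.array(Y), np.array(Z), cmap="viridis")
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_zlabel("z")
    plt.show()
```

Running `plot_surface(*surface_grid())` in a notebook cell should draw a rippled surface centered on the origin.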

Is it conscious? Is it sentient? Maybe this doesn’t matter.

Not too long ago, an employee of Google triggered a major controversy by suggesting that one of Google’s large language models, LaMDA, was conscious. His thoughts on this were larded with idiosyncratic religious ideas, tickling technophiles, technophobes and the philosophically circumspect in just the right zones.

With ChatGPT, however, I have not yet come across anyone claiming that the software is conscious. Instead, people are arguing about whether GPT’s demonstrably stunning abilities illustrate “real” understanding and knowledge, or “mere” statistical correlation. In my interactions with ChatGPT, I see no evidence of a personality or emotion, and only a thin veneer of common sense. The skills on display are both human-like and incredibly alien. It is the latter aspect that fascinates me.4

The sheer number of free parameters in a GPT-style model, combined with the nature of the training (predict the next word), suggests to me that there is no substantive commonality between how ChatGPT produces coherent text and how a human does. Humans do not learn to produce language by completing other people’s sentences: our language use is embedded in a wider web of nonverbal actions, reactions and experiences. Yes, humans are capable of confabulation, overconfidence, and mindless imitation.

But just because something walks like a duck and talks like a duck does not mean it’s a duck.

I think what is interesting about GPT and other large language models is not what they imply about artificial ‘minds’, but what they suggest about the nature of the human textual corpus.

A second Parthenon of words?

In Ardor, Roberto Calasso explores the strange world of Ancient Sanskrit texts. He points out that the anonymous authors of these texts left almost no material traces: no ruins, no temples. Instead, they left a “Parthenon of words”. For centuries, scholars have wandered through this Parthenon, trying to unearth old meanings and detect resonances with new ones.

Large language models may offer us radically new ways to explore the Parthenon of text that humanity has collectively produced5. No single human being can read even a tiny fraction of the training data baked into the connection weights of GPT. Whether these weights constitute sentience or consciousness is a discussion that can wait until we have intelligent machines that seem to have agency: the set of quasi-stable goals and quirks that develop through embedded interaction in the actual world6. But I suspect that no mere software tool, however skilled it becomes at churning out text, will ever convince me of its sentience. And it is unlikely that companies with the resources to create such tools have any interest in machines that display agency: independent motivation might interfere with profit-maximization.

While we wait for our favorite sentient-AI movie plots to bleed into reality, we might be able to use models like GPT to gain new perspectives on the emergent structures latent in our corpus of texts. Human faculties find their funhouse mirror reflections in the LLMs. Lurking in these statistical pattern-detectors that share none of our evolutionary, cultural or experiential history, we find warped and cracked versions of memory, imagination, free-association, poesy, logic, and analogy, all stitched together with the ability to generate grammatical sentences. In my experience, ChatGPT seems capable of creating structural templates: this suggests that somewhere in its weights we may find implicit representations of abstract concepts. How else could it write roughly accurate descriptions of backprop (or Hebbian learning) in a variety of styles?

These implicit representations need not resemble our own explicit concepts or our unconscious neural representations7 at all — in fact, we may learn more from them if they don’t. It may be that GPT’s statistical ‘knowledge’, despite superficial resemblances to our own skill-set, is orthogonal to natural intelligence: the ‘machine unconscious’ may be a completely different path through the high-dimensional space that is our textual jungle. For this reason it may stimulate new abstractions in us. Future GPT-whisperers may be able to discern novel patterns in complex data, harmonizing two modes of intelligence to produce a new 'cyborg stereo vision'.8

For now, it may be easier to get a taste of unanticipated connections by examining the outputs of AI image generators. Tools like DALL-E and MidJourney produce art that can remix styles and subjects in dizzying combinations. These tools often use visual elements to “illustrate” common features of seemingly disparate ideas provided in the prompt. In the image below, the prompt I provided was “Polish Star Wars poster featuring an elephant”. In three of the generated images, it seems to have landed on a latent similarity between elephants and the AT-AT walkers from The Empire Strikes Back. I had no inkling of this when I wrote the prompt.

The Tower of Babble is a byzantine complex of ungrounded symbols9 that is too vast to navigate on foot. But with LLMs we can teleport into the labyrinth at random; perhaps we will find unexpected chambers and secret passages. ‘Prompt engineering’ — the skilled crafting of inputs into LLMs and other machine learning models — may become a sought-after skill. The best prompt engineers will be more like prompt poets, supplying the vitality and caprice required to find hidden structures in the labyrinth.

(Hopefully the Tower doesn’t come crashing down on our heads before we can learn something useful from it10.)

Notes

I’ve collected more examples of GPT-2 howlers in this Twitter thread.

In high school in India, we only had to write essays during exams, and they were handwritten. I doubt things have changed all that much. Teachers in India and other poorer countries are probably not worried about this form of cheating. (‘Traditional’ cheating is, of course, still rampant. :P) It would be nice if the purveyors of this technology made all AI-generated text available to plagiarism detectors, but that could become expensive from a storage perspective, and also a nightmare for privacy.

Abeba Birhane and Deborah Raji have written a good essay on the various problems with GPT and its ilk: ChatGPT, Galactica, and the Progress Trap.

“OpenAI CEO Sam Altman appealed to the community of users to help improve the model. Such crowdsourced audits, especially when solicited, are not new modes of accountability—engaging in such feedback constitutes labor, albeit uncompensated labor. People at the margins of society who are disproportionately impacted by these systems are experts at vetting them, due to their lived experience.”

Perhaps my experience is a higher-dimensional version of an uncanny valley. An unsettling saddle point?

The training data is biased, of course, towards Western — and specifically English-language — texts that have been digitized.

I recently gave a talk on dynamical systems theory in neuroscience. I focused on the importance of stability when thinking about behavior. Here’s the YouTube link:

Cognitive and behavioral attractors: dynamical systems theory as a lens for systems neuroscience

For more on neural representations, which turn out to be a controversial topic in some circles, see these two essays:

(1) In defense of placeholders: the case of ‘representation’

Also, perhaps we have invented a statistico-semiotic hyperobject?

I speculate about the nature of symbolic processing here:

I firmly believe that GPT is just a stepping stone. I think the future for such tools is some form of symbiosis with humans, where systems can be developed that take full advantage of the good attributes of each group member.

For example, tools like GPT are great at managing huge amounts of data, and their pattern-matching capabilities are very good at recognizing relationships that exist in this data, provided there is significant similarity between the existing data and the new data being analyzed. They are less good at creative imagination of possibilities that have not been seen before.

Humans, on the other hand, excel at this type of creative thinking but have severe limitations on the amount of data that they can handle.

I am not convinced that natural language is always the best medium of communication between humans and computers. I think that we need to devise new ways of communicating that are closer to the problem being examined. In particular, natural language has limitations in communicating complex structural information and our graphical interfaces are currently very human-centric and rely heavily on the byproducts of the perception of vision.

Working to develop these possibilities should provide an interesting time for the near future.